How to increase GPU training throughput

This guide covers increasing GPU training throughput across a batch of training runs, as opposed to increasing the instantaneous throughput of a single training run. Speeding up a single run also raises overall throughput, but that is outside the scope of this guide.

Use case: suppose we are sweeping hyper-parameters and there are N training runs. We want to finish these N runs as fast as possible.

Technique - Improve disk IO

If the training data is a large set of files, e.g. the 150GB ImageNet dataset, read it from a local drive instead of a network drive. The same applies if training writes a lot of data to disk.
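One way to apply this is to copy the dataset to local disk once, before launching any runs, and point every run at the local copy. The following is a minimal sketch under assumptions: the function name `stage_dataset` and the `.staged` marker file are hypothetical, and the paths are whatever your machine uses.

```python
import shutil
from pathlib import Path


def stage_dataset(network_dir: str, local_dir: str) -> Path:
    """Copy a dataset from a network drive to local disk once, then reuse it."""
    src = Path(network_dir)
    dst = Path(local_dir)
    marker = dst / ".staged"  # hypothetical marker, written only after a full copy
    if marker.exists():
        return dst  # already staged by an earlier call
    # dirs_exist_ok (Python 3.8+) lets a retry write into a partially copied directory
    shutil.copytree(src, dst, dirs_exist_ok=True)
    marker.touch()
    return dst
```

Call it once from the launcher rather than from each training process, so that parallel runs do not all try to copy the same files at the same time.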

Technique - Parallelize training

If the machine is not resource-bound, running another training run in parallel should increase throughput. This is especially true when GPU utilization is well below 100% and GPU memory can fit another copy of the model. Other resources to watch are CPU, memory, disk IO, and network IO. If any one of these is close to 100% utilization, parallelizing training is unlikely to increase training throughput. The sections below cover how to check resource utilization.

There are simple and advanced ways to parallelize training. Here are two:

- Parameterize the Python training script (see argparse) and run it multiple times, each with different parameters.

- Use a more general hyper-parameter search library such as Ray Tune.
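The first option can be sketched as follows. This is an illustration under assumptions, not a definitive implementation: the flags `--lr` and `--batch-size`, and the helper names `parse_args`, `build_commands`, and `run_all`, are hypothetical. `parse_args` is what the training script would call; the other two functions belong to a small launcher that starts one subprocess per parameter combination, a few at a time.

```python
import argparse
import itertools
import subprocess
import sys
from concurrent.futures import ThreadPoolExecutor


def parse_args(argv=None):
    """Training-script side: read hyper-parameters from the command line."""
    parser = argparse.ArgumentParser()
    parser.add_argument("--lr", type=float, default=0.01)
    parser.add_argument("--batch-size", type=int, default=64)
    return parser.parse_args(argv)


def build_commands(script, grid):
    """Launcher side: one command line per combination of values in the grid."""
    names = sorted(grid)
    commands = []
    for values in itertools.product(*(grid[name] for name in names)):
        cmd = [sys.executable, script]
        for name, value in zip(names, values):
            cmd += [f"--{name}", str(value)]
        commands.append(cmd)
    return commands


def run_all(commands, max_parallel):
    """Run the commands as subprocesses, at most max_parallel at a time.

    Returns the exit codes in the same order as the commands.
    """
    def run(cmd):
        return subprocess.run(cmd).returncode

    with ThreadPoolExecutor(max_workers=max_parallel) as pool:
        return list(pool.map(run, commands))
```

For example, `run_all(build_commands("train.py", {"lr": [0.1, 0.01], "batch-size": [64, 128]}), max_parallel=2)` would start four runs of a hypothetical `train.py`, two at a time. Pick `max_parallel` based on how much GPU memory and other resources each run needs.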

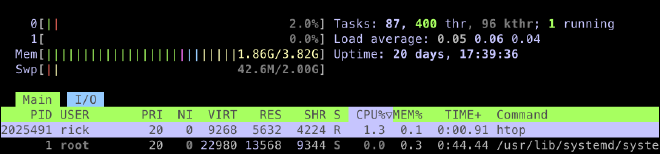

How to check CPU and memory utilization?

Read CPU and memory utilization by running htop at the terminal.

To install htop

CentOS:

sudo yum install htop

Ubuntu:

sudo apt-get install htop
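htop is interactive, so a launcher script that wants to decide on its own whether there is room for another run needs a different source. The sketch below uses only the Python standard library and assumes Linux: it reads the 1-minute load average and parses the text of /proc/meminfo. The function names are hypothetical, and load average is only a rough proxy for the CPU utilization that htop shows.

```python
import os


def cpu_load_per_core():
    """1-minute load average divided by the number of logical CPUs.

    Values near or above 1.0 suggest the CPUs are already busy.
    """
    one_minute, _, _ = os.getloadavg()
    return one_minute / (os.cpu_count() or 1)


def memory_used_fraction(meminfo_text):
    """Fraction of RAM in use, computed from the text of /proc/meminfo."""
    fields = {}
    for line in meminfo_text.splitlines():
        key, _, rest = line.partition(":")
        parts = rest.split()
        if parts:
            fields[key] = int(parts[0])  # sizes are reported in kB
    return 1.0 - fields["MemAvailable"] / fields["MemTotal"]
```

On a Linux machine, `memory_used_fraction(open("/proc/meminfo").read())` gives the current value.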

How to check GPU and GPU memory utilization?

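Read GPU utilization and GPU memory usage by running nvidia-smi at the terminal. In the sample output below, GPU-Util is 0% and Memory-Usage is 0MiB / 15360MiB, so the GPU is idle.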

+-----------------------------------------------------------------------------------------+
| NVIDIA-SMI 550.54.15              Driver Version: 550.54.15      CUDA Version: 12.4     |
|-----------------------------------------+------------------------+----------------------+
| GPU  Name                 Persistence-M | Bus-Id          Disp.A | Volatile Uncorr. ECC |
| Fan  Temp   Perf          Pwr:Usage/Cap |           Memory-Usage | GPU-Util  Compute M. |
|                                         |                        |               MIG M. |
|=========================================+========================+======================|
|   0  Tesla T4                       Off |   00000000:00:04.0 Off |                    0 |
| N/A   44C    P8             12W /   70W |       0MiB /  15360MiB |      0%      Default |
|                                         |                        |                  N/A |
+-----------------------------------------+------------------------+----------------------+

+-----------------------------------------------------------------------------------------+
| Processes:                                                                              |
|  GPU   GI   CI        PID   Type   Process name                              GPU Memory |
|        ID   ID                                                               Usage      |
|=========================================================================================|
|  No running processes found                                                             |
+-----------------------------------------------------------------------------------------+
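The same numbers can be read from a script, which helps when deciding automatically whether a GPU has room for another run. The sketch below calls nvidia-smi with its `--query-gpu` and `--format=csv,noheader,nounits` options and parses the result. The function names and the thresholds in `has_headroom` (80% utilization, 4096 MiB needed) are assumptions chosen for illustration; set them from what your own model requires.

```python
import subprocess

QUERY = "utilization.gpu,memory.used,memory.total"


def parse_gpu_stats(csv_text):
    """Parse one line per GPU, e.g. "0, 0, 15360" (percent, MiB, MiB)."""
    stats = []
    for line in csv_text.strip().splitlines():
        util, used, total = (float(field) for field in line.split(","))
        stats.append({"util_pct": util, "mem_used_mib": used, "mem_total_mib": total})
    return stats


def read_gpu_stats():
    """Query nvidia-smi for utilization and memory of every GPU."""
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY}", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return parse_gpu_stats(result.stdout)


def has_headroom(stat, max_util_pct=80.0, needed_mib=4096.0):
    """True if the GPU is below the utilization threshold and has enough free memory.

    Both thresholds are illustrative defaults, not recommendations.
    """
    free_mib = stat["mem_total_mib"] - stat["mem_used_mib"]
    return stat["util_pct"] < max_util_pct and free_mib >= needed_mib
```

A launcher could call `read_gpu_stats()` before starting each new run and wait while no GPU passes `has_headroom`.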

How to check disk IO utilization?

TBD

How to check network IO utilization?

TBD

Summary

By using a local drive and parallelizing training runs, we can improve GPU training throughput.

You might also be interested in:

- a code starter kit for training MNIST with Ray, PyTorch Lightning and PyTorch.

- a Docker-based runtime starter kit for running PyTorch and TensorFlow code on CPU or GPU.